How can I make it run without starting the function locally, so that the function runs whenever I add a blob file?

In which container do I have to upload that file?

When you create the function and choose the blob trigger, you need to specify a storage account and a path. That path points to a specific "folder" in a blob container in your storage account.

Thanks for this article, it is really informative. I have one doubt here: let's assume we get a huge file in blob storage and the function app takes a long time to parse it and insert it into the database. Then a function timeout will happen.

If you know the function might time out, there are some alternatives. You can take a look at Durable Functions, or you can use Azure Data Factory, which can also work with triggers.

AZURE FUNCTIONS FILEWATCHER CODE

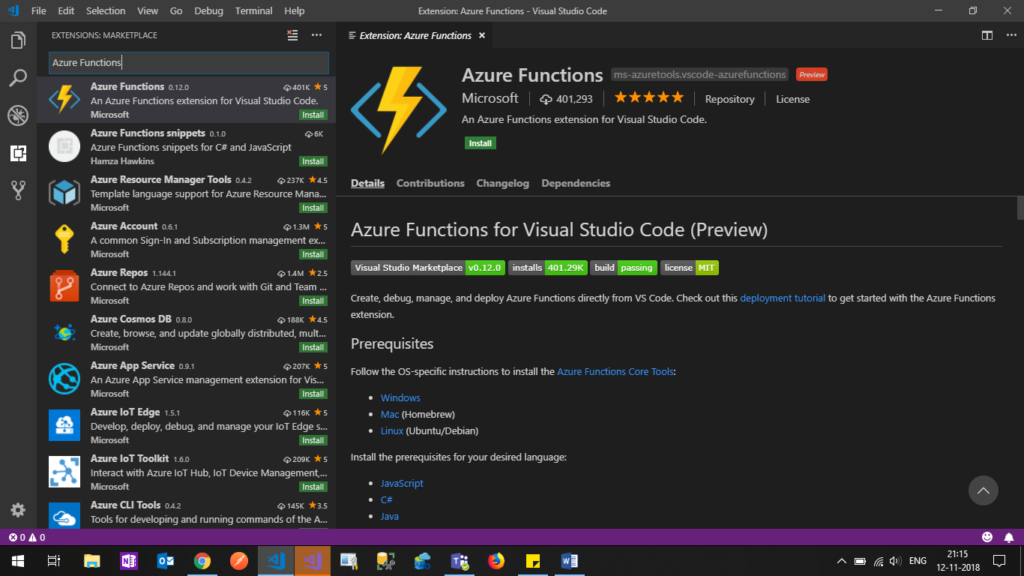

I have created an Azure Function that gets triggered when a blob is added to a specific container. When I run the function from VS Code everything works fine.

You'll need to deploy the Function to an Azure Function App.

To create an Azure Function, we need the following prerequisites: Visual Studio with the Azure Development workload enabled. You can also create an Azure Function directly in the portal, but Visual Studio is preferred when you want easy source control.

We're going to load a small JSON file holding the top 250 movies. The function's entry point starts with `public static void Run([BlobTrigger("functionstest/…`. The configuration is built with `SetBasePath(context.FunctionAppDirectory)` and `AddJsonFile("", optional: true, reloadOnChange: true)`. The blob is read with `StreamReader reader = new StreamReader(myBlob)` and written to the database with `SqlBulkCopy bulkcopy = new SqlBulkCopy(myConnectionString)` and `bulkcopy.DestinationTableName = "topmovies"`.

A helper, `public static DataTable Tabulate(string json)`, converts the JSON into a `DataTable`. It finds the first array using Linq (`var srcArray = jsonLinq.Descendants().Where(d => d is JArray).First()`), loops over the rows and their columns (`foreach (JObject row in srcArray.Children())` and `foreach (JProperty column in row.Properties())`), only includes `JValue` types (`if (column.Value is JValue)`), and ends with `return JsonConvert.DeserializeObject(trgArray.ToString())`.

Now it's time to see if the code actually works. In Visual Studio, hit F5 or click on the "play" icon to start debugging.
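The `Tabulate` helper above only survives in fragments, so here is a minimal sketch of how it could fit together, assuming the Newtonsoft.Json NuGet package is referenced. The class name `JsonTabulator`, the variable `cleanRow`, and the sample JSON are hypothetical. The generic type arguments on `Children<JObject>()` and `DeserializeObject<DataTable>()` are also assumptions, since the fragments show those calls without type arguments.

```csharp
using System;
using System.Data;
using System.Linq;
using Newtonsoft.Json;
using Newtonsoft.Json.Linq;

// Hypothetical wrapper class; only the Tabulate name comes from the article.
public static class JsonTabulator
{
    // Turns the first JSON array found in the document into a DataTable,
    // keeping only scalar (JValue) properties of each row.
    public static DataTable Tabulate(string json)
    {
        var jsonLinq = JObject.Parse(json);

        // Find the first array using Linq
        var srcArray = jsonLinq.Descendants().Where(d => d is JArray).First();

        var trgArray = new JArray();
        foreach (JObject row in srcArray.Children<JObject>())
        {
            var cleanRow = new JObject(); // hypothetical name
            foreach (JProperty column in row.Properties())
            {
                // Only include JValue types (skips nested objects and arrays)
                if (column.Value is JValue)
                {
                    cleanRow.Add(column.Name, column.Value);
                }
            }
            trgArray.Add(cleanRow);
        }

        // Assumption: the flattened array is deserialized into a DataTable
        return JsonConvert.DeserializeObject<DataTable>(trgArray.ToString());
    }
}

public static class Program
{
    public static void Main()
    {
        // Hypothetical sample input, not the article's movie file
        string json = "{\"items\":[" +
            "{\"rank\":1,\"title\":\"A\",\"info\":{\"x\":1}}," +
            "{\"rank\":2,\"title\":\"B\",\"info\":{\"x\":2}}]}";

        DataTable dt = JsonTabulator.Tabulate(json);
        Console.WriteLine($"{dt.Rows.Count} rows, {dt.Columns.Count} columns");
    }
}
```

Filtering on `JValue` drops the nested `info` object in the sample, so the resulting table only has the `rank` and `title` columns. A `DataTable` in this shape is what `SqlBulkCopy` can write to the destination table, provided the column names line up with the table's columns.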

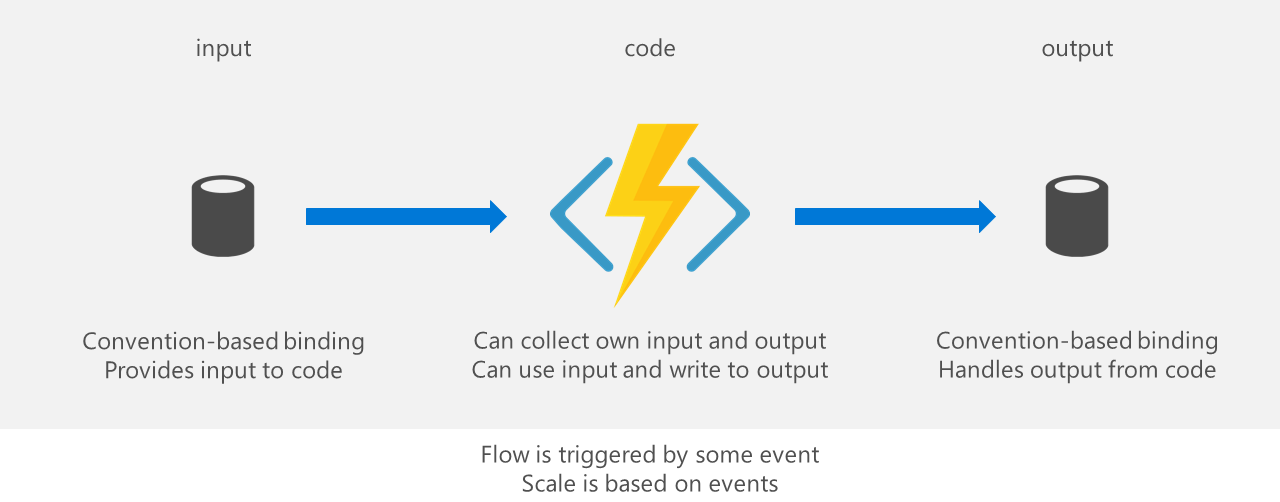

There are many programming languages available and there's also a template for using a blob trigger. This means the Azure Function will automatically run every time a new file is created in a blob container. In this tip, we'll give you an example of a simple Azure Function which will read a JSON file from a blob container and write its contents to an Azure SQL Database table. Even though there's some code involved, Azure Functions are a flexible and powerful tool and they are useful in your "cloud ETL toolkit".

In the Azure ecosystem there are a number of ways to process files from Azure Blob Storage.

Azure Logic Apps. With these you can easily automate workflows without writing any code. An example is the tip Transfer Files from SharePoint To Blob Storage with Azure Logic Apps. They are better suited though to process the contents of a file in its whole, and not for writing data to Azure SQL Database. Writing to the database would process the file line by line, which is not optimal for performance.

Azure Data Factory is most likely the easiest tool to copy data into an Azure SQL Database. However, the Copy activity doesn't allow for any transformations on the data. Mapping data flow is a good alternative, but since this runs on top of an Azure Databricks cluster, it might be overkill for a small file. ADF also has support for triggers.

Azure Functions are little pieces of event-driven code which run on serverless compute.

